StarCluster

Terry Gliedt

|||

| (38 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | Back to the beginning [ | + | __TOC__ |

| + | |||

| + | Back to the beginning: [[GotCloud]] | ||

| + | |||

| + | Back to [[GotCloud: Amazon]] | ||

If you have access to your own cluster, your task will be much simpler. | If you have access to your own cluster, your task will be much simpler. | ||

| − | Install the | + | Install the GotCloud software ([[GotCloud: Source Releases]]) |

| − | and run it as | + | and run it as described on the same pages. |

| − | For those who are not so lucky to have access to a cluster, AWS provides an alternative. | + | For those who are not so lucky to have access to a cluster, Amazon Web Services (AWS) provides an alternative. |

| − | You may run the | + | You may run the gotcloud software on a cluster created in AWS. |

One tool that makes the creation of a cluster of AMIs (Amazon Machine Instances) is | One tool that makes the creation of a cluster of AMIs (Amazon Machine Instances) is | ||

'''StarCluster''' (see http://star.mit.edu/cluster/). | '''StarCluster''' (see http://star.mit.edu/cluster/). | ||

| − | The following shows an example of how you might use '' | + | The following shows an example of how you might use ''StarCluster'' to create an AWS cluster and set it up to run GotCloud. |

| − | |||

| − | |||

| − | |||

There are many details setting up starcluster and this is ''not'' intended to explain all | There are many details setting up starcluster and this is ''not'' intended to explain all | ||

of the many variations you might choose, but should provide you a working example. | of the many variations you might choose, but should provide you a working example. | ||

| − | |||

| − | + | == Getting Started With StarCluster == | |

| − | + | StarCluster provides lots of documentation (http://star.mit.edu/cluster/) which will provide more information on it than we have here. | |

| − | |||

| − | |||

| − | |||

| − | + | To install and setup StarCluster for the first time, you can follow the QuickStart instructions: http://star.mit.edu/cluster/docs/latest/quickstart.html | |

| − | + | * Includes installation instructions | |

| + | ** See http://star.mit.edu/cluster/docs/latest/installation.html for more detailed StarCluster installation instructions if the QuickStart instructions are not enought (especially if running on Windows) | ||

| + | * Includes setting up a basic StarCluster configuration file | ||

| + | ** You will need your AWS Credentials to setup the configuration file | ||

| + | *** If you need help setting up your AWS credentials, see: [[AWS Credentials]] | ||

| + | You can skip actually starting the cluster in the QuickStart instructions if you want. | ||

| + | |||

| + | ''' Troubleshooting: ''' When I tried this, the <code>starcluster start mycluster</code> step failed similar to: | ||

| + | * http://star.mit.edu/cluster/mlarchives/2425.html | ||

| + | * So I followed the suggestions there and at https://github.com/jtriley/StarCluster/issues/455: | ||

| + | *# <pre>$ sudo pip uninstall boto</pre> | ||

| + | *# <pre>$ sudo easy_install boto==2.32.0</pre> | ||

| + | *#* I was having trouble with pip install, but found that easy_install worked | ||

| + | * I had to force terminate mycluster after a failed start: | ||

| + | ** <pre> starcluster terminate -f mycluster</pre> | ||

| + | * Then I was able to successfully start my cluster | ||

| + | |||

| + | ''' Don't forget to terminate your cluster:''' | ||

| + | starcluster terminate mycluster | ||

| − | |||

| − | StarCluster | + | == StarCluster and GotCloud == |

| − | + | === StarCluster Config Settings === | |

| − | |||

| − | + | By default, StarCluster expects a configuration file in ~/.starcluster/config. | |

| − | + | * StarCluster will create a model file for you | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | Ensure your StarCluster configuration file is set for your usage. | |

| − | + | * General AWS Settings: | |

| − | + | [aws info] | |

| − | [aws info] | + | aws_access_key_id = #your aws access key id here |

| − | + | aws_secret_access_key = #your secret aws access key here | |

| − | + | aws_user_id = #your 12-digit aws user id here | |

| − | + | * You should have set these in [[#Getting Started With StarCluster|Getting Started With StarCluster]] above (quickstart guide and AWS Credentials) . | |

| − | + | * GotCloud Cluster Definition | |

| − | + | ** You may want to create a new cluster section for running GotCloud (or you can use smallcluster) in your configuration file: <code>~/.starcluster/config</code> | |

| − | + | ** You can call it anything you want, for example, <pre>gccluster</pre> | |

| + | ** Example: | ||

| + | **: <pre>[cluster gccluster] KEYNAME = mykey CLUSTER_SIZE = 4 CLUSTER_USER = sgeadmin CLUSTER_SHELL = bash MASTER_IMAGE_ID = ami-6ae65e02 NODE_IMAGE_ID = ami-3393a45a NODE_INSTANCE_TYPE = m3.large</pre> | ||

| + | *** Set <code>KEYNAME</code> to the key you want to use | ||

| + | *** Set <code>CLUSTER_SIZE</code> to the number of nodes you want to start up (this may be different than 4) | ||

| + | *** Set <code>CLUSTER_USER</code> to add additional users, like <code>sgeadmin</code> | ||

| + | *** Set <code>CLUSTER_SHELL</code> to define the shell you want to use, like <code>bash</code> | ||

| + | *** Set <code>MASTER_IMAGE_ID</code> to the latest GotCloud AMI, see: [[GotCloud: AMIs]] | ||

| + | **** Contains GotCloud, the reference, and the demo files in the /home/ubuntu/ directory that will be visible on all nodes in the cluster | ||

| + | **** Has a 30G volume, but only 6G available | ||

| + | *** Set <code>NODE_IMAGE_ID</code> to a StarCluster <code>ubuntu x86_64</code> AMI | ||

| + | **** Since each node does not need its own 30G volume containing GotCloud, the reference, and the demo files, we use a separate image for the nodes. | ||

| + | *** The nodes can just access the master's copy of GotCloud, the reference, and the Amazon demo | ||

| + | *** Set <code>NODE_INSTANCE_TYPE</code> to the type of instances you want to start in your cluster | ||

| + | **** See http://aws.amazon.com/ec2/pricing/ for instance descriptions and prices | ||

| + | **** We do not recommend running GotCloud on machines with less than 4MB of memory | ||

| + | ** The <code>CLUSTER_SIZE</code> * CPUs in <code>NODE_INSTANCE_TYPE</code> = the number of jobs you can run concurrently in GotCloud | ||

| − | + | * Define Data Volumes | |

| − | + | ** By default, the GotCloud AMI contains about 5G of extra space that you can use | |

| − | + | *** /home/ubuntu/ directory is visible from all machines | |

| − | + | **** Use /home/ubuntu/ for the output directory if it is <5G | |

| − | + | **** This directory will be deleted when you terminate the AMI | |

| + | ** Create your Own Volumes and attach them to the GotCloud cluster | ||

| + | *** '''Instructions TBD''' | ||

| − | [ | + | === Starting the Cluster === |

| − | + | # Start the cluster: | |

| + | #* <pre>starcluster start -c gccluster mycluster</pre> | ||

| + | #** Alternatively, if you can the default template at the start of the configuration file in the <code>[global]</code> section to gccluster: <code>DEFAULT_TEMPLATE=gccluster</code>, you can run: | ||

| + | #*** <pre>starcluster start mycluster</pre> | ||

| + | #* It will take a few minutes for the cluster to start | ||

| − | |||

| − | |||

| + | === Copying Data to/from the Cluster === | ||

| + | Copy data onto the cluster (command run from your local machine) | ||

| + | starcluster put /path/to/local/file/or/dir /remote/path/ | ||

| − | + | Pull the data from the cluster onto your local machine (command run from your local machine) | |

| − | + | starcluster get /path/to/remote/file/or/dir /local/path/ | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | '''Reminder, if you write your output to /home/ubuntu/, it will be deleted when you terminate the cluster''' | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | === Running GotCloud on StarCluster === | |

| − | # | + | * If you have not already, logon to the cluster as ubuntu: |

| − | + | ** <pre>starcluster sshmaster -u ubuntu mycluster</pre> | |

| + | *** Type <code>yes</code> if the terminal asks if you want to continue connecting | ||

| + | * When running GotCloud: | ||

| + | ** Set the cluster/batch type in either configuration or on the command line: | ||

| + | *** In Configuration: | ||

| + | ***: <pre>BATCH_TYPE = sgei</pre> | ||

| + | *** On the command-line: | ||

| + | ***: <pre>--batchtype sgei</pre> | ||

| + | ** Set the number of jobs to run: | ||

| + | **: <pre>--numjobs #</pre> | ||

| + | *** Replace number with the number of concurrent jobs you want to run (probably <code>CLUSTER_SIZE</code> * CPUs in <code>NODE_INSTANCE_TYPE</code>) | ||

| + | ** Otherwise, run GotCloud as you normally would. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | To login to a specific non-master node, do: | |

| − | + | starcluster sshnode -u ubuntu mycluster node001 | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | === Monitoring Cluster Usage === | |

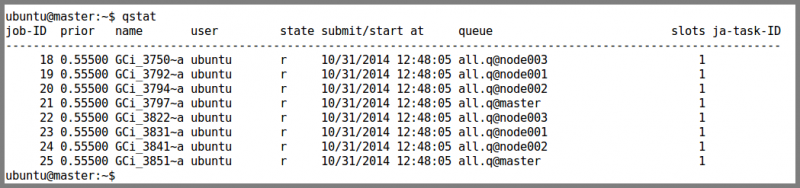

| − | + | * Monitor jobs in the queue | |

| − | + | ** <pre>qstat</pre> | |

| − | + | ** This will show you how the currently running jobs and how they are spread across the nodes in your cluster | |

| − | + | **:[[File:Qstat.png|800px]] | |

| − | + | *** state descriptions: | |

| − | + | **** <code>qw</code> : queued and waiting (not yet assigned to a node) | |

| − | + | **** <code>r</code> : running | |

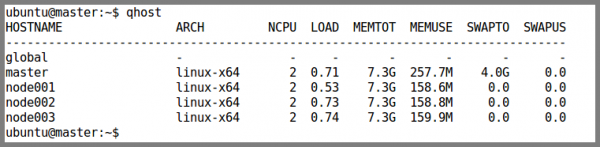

| + | * View Sun Grid Engine Load | ||

| + | ** <pre>qhost</pre> | ||

| + | **:[[File:Qhost.png|600px]] | ||

| + | *** ARCH : architecture | ||

| + | *** NCPU : number of CPUs | ||

| + | *** LOAD : current load | ||

| + | *** MEMTOT : total memory | ||

| + | *** MEMUSE : memory in use | ||

| + | *** SWAPTO : swap space | ||

| + | *** SWAPUS : swap space in use | ||

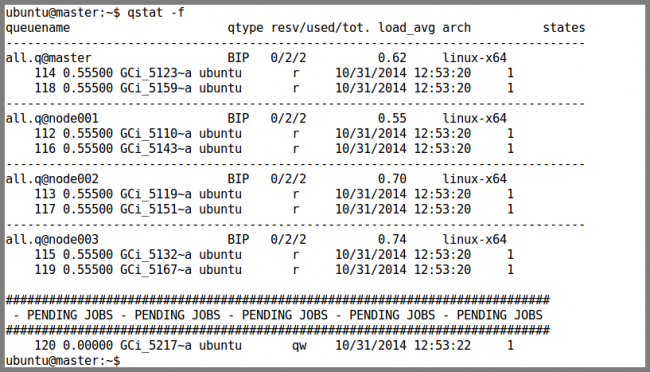

| + | * View the average load per node using: | ||

| + | ** <pre>qstat -f</pre> | ||

| + | **:[[File:Qstatf.png|650px]] | ||

| + | *** <code>load_avg</code> field contains the load average for each node | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | # | + | === Terminate the Cluster === |

| − | # | + | # Reminder, check if you need to copy any data off of the cluster that will be deleted upon termination |

| − | # | + | #* [[#Copying Data to/from the Cluster|Copying Data to/from the Cluster]] |

| − | # | + | # Terminate the cluster |

| − | # | + | #* <pre>starcluster terminate mycluster</pre> |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | == Run GotCloud Demo Using StarCluster == | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | # | + | #Create a new cluster section in your configuration file: <code>~/.starcluster/config</code> |

| − | # [ | + | #* Add the following to the end of the configuration file: |

| − | # | + | #*: <pre>[cluster gccluster] KEYNAME = mykey CLUSTER_SIZE = 4 CLUSTER_USER = sgeadmin CLUSTER_SHELL = bash MASTER_IMAGE_ID = ami-6ae65e02 NODE_IMAGE_ID = ami-3393a45a NODE_INSTANCE_TYPE = m3.large</pre> |

| − | # | + | # Start the cluster: |

| + | #* <pre>starcluster start -c gccluster mycluster</pre> | ||

| + | #** Alternatively, if you can the default template at the start of the configuration file in the <code>[global]</code> section to gccluster: <code>DEFAULT_TEMPLATE=gccluster</code>, you can run: | ||

| + | #*** <pre>starcluster start mycluster</pre> | ||

| + | #* It will take a few minutes for the cluster to start | ||

| + | # Logon to the cluster as ubuntu: | ||

| + | #* <pre>starcluster sshmaster -u ubuntu mycluster</pre> | ||

| + | #** Type <code>yes</code> if the terminal asks if you want to continue connecting | ||

| − | + | {{GotCloud: Amazon Demo Setup|hdr=====}} | |

| − | |||

| − | |||

| − | |||

| − | # | + | ==== Run GotCloud SnpCall Demo ==== |

| − | # | + | # Run GotCloud snpcall |

| − | # | + | #* <pre>gotcloud snpcall --conf example/test.conf --outdir output --numjobs 8 --batchtype sgei</pre> |

| − | # [ | + | #** The ubuntu user is setup to have the gotcloud program and tools in its path, so you can just type the program name and it will be found |

| − | # | + | #** There is enough space in /home/ubuntu to put the Demo output |

| − | # | + | #*** /home/ubuntu is visible from all nodes in the cluster |

| − | # | + | #** This will take a few minutes to run. |

| + | #** GotCloud first generates a makefile, and then runs the makefile | ||

| + | #** After a while GotCloud snpcall will print some messages to the screen. This is expected and ok. | ||

| + | # See [[#Monitoring Cluster Usage|Monitoring Cluster Usage]] if you are interested in monitoring the cluster usage as GotCloud runs | ||

| + | # When complete, GotCloud snpcall will indicate success/failure | ||

| + | #* Look at the snpcall results, see: [[GotCloud:_Amazon_Demo#Examining_SnpCall_Output|GotCloud: Amazon Demo -> Examining SnpCall Output]] | ||

| − | ### | + | ==== Run GotCloud Indel Demo ==== |

| − | # | + | # Run GotCloud indel |

| − | # | + | #* <pre>gotcloud indel --conf example/test.conf --outdir output --numjobs 8 --batchtype sgei</pre> |

| − | # | + | #** The ubuntu user is setup to have the gotcloud program and tools in its path, so you can just type the program name and it will be found |

| − | # | + | #** There is enough space in /home/ubuntu to put the Demo output |

| − | # | + | #*** /home/ubuntu is visible from all nodes in the cluster |

| − | # | + | #** This will take a few minutes to run. |

| + | # See [[#Monitoring Cluster Usage|Monitoring Cluster Usage]] if you are interested in monitoring the cluster usage as GotCloud runs | ||

| + | # When complete, GotCloud indel will indicate success/failure | ||

| + | #* Look at the indel results, see: [[GotCloud:_Amazon_Demo#Examining_Indel_Output|GotCloud: Amazon Demo -> Examining Indel Output]] | ||

| − | # | + | ==== Terminate the Demo Cluster ==== |

| − | # | + | # Exit out of your master node |

| − | # | + | #* <pre>exit</pre> |

| − | + | # Terminate the cluster | |

| − | + | #* Since this is just a demo, we don't have to worry about the data getting deleted upon termination | |

| + | #* <pre>starcluster terminate mycluster</pre> | ||

| + | #** Answer <code>y</code> to the questions <code>Terminate EBS cluster mycluster (y/n)? </code> | ||

| − | + | == Old Instructions== | |

| − | + | '''StarCluster Configuration Example''' | |

| − | |||

| − | |||

| − | |||

| − | + | StarCluster creates a model configuration file in ~/.starcluster/config and you are instructed | |

| − | + | to edit this and set the correct values for the variables. | |

| − | + | Here is a highly simplified example of a config file that should work. | |

| − | + | Please note there are many things you might want to choose, so craft the config file with care. | |

| − | + | You'll need to specify nodes with 4GB of memory (type m1.medium) and make sure each node has access to the input and output data for the step being run. | |

| − | |||

| − | # | + | <code> |

| − | # [ | + | #################################### |

| − | # | + | ## StarCluster Configuration File ## |

| − | # | + | #################################### |

| − | # | + | [global] |

| − | + | DEFAULT_TEMPLATE=myexample | |

| + | |||

| + | ############################################# | ||

| + | ## AWS Credentials Settings | ||

| + | ############################################# | ||

| + | [aws info] | ||

| + | AWS_ACCESS_KEY_ID = AKImyexample8FHJJF2Q | ||

| + | AWS_SECRET_ACCESS_KEY = fthis_was_my_example_secretMqkMIkJjFCIGf | ||

| + | AWS_USER_ID=199998888709 | ||

| + | |||

| + | AWS_REGION_NAME = us-east-1 # Choose your own region | ||

| + | AWS_REGION_HOST = ec2.us-east-1.amazonaws.com | ||

| + | AWS_S3_HOST = s3-us-east-1.amazonaws.com | ||

| + | |||

| + | ########################### | ||

| + | ## EC2 Keypairs | ||

| + | ########################### | ||

| + | [key <font color='green'>east1_starcluster</font>] | ||

| + | KEY_LOCATION = ~/.ssh/AWS/east1_starcluster_key.rsa # Same region | ||

| + | |||

| + | ########################################### | ||

| + | ## Define Cluster | ||

| + | ## starcluster start -c east1_starcluster nameichose4cluster | ||

| + | ########################################### | ||

| + | [cluster <font color='red'>myexample</font>] # Name of this cluster definition | ||

| + | KEYNAME = <font color='green'>east1_starcluster</font> # Name of keys I need | ||

| + | CLUSTER_SIZE = 4 # Number of nodes | ||

| + | CLUSTER_SHELL = bash | ||

| + | |||

| + | # Choose the base AMI using starcluster listpublic | ||

| + | # (http://star.mit.edu/cluster/docs/0.93.3/faq.html) | ||

| + | NODE_IMAGE_ID = ami-765b3e1f | ||

| + | AVAILABILITY_ZONE = us-east-1 # Region again! | ||

| + | NODE_INSTANCE_TYPE = m1.medium # 4G memory is the minimum for GotCloud | ||

| + | |||

| + | VOLUMES = <font color='orange'>gotcloud</font>, <font color='blue'>mydata</font> | ||

| + | [volume <font color='blue'>mydata</font>] | ||

| + | VOLUME_ID = vol-6e729657 | ||

| + | MOUNT_PATH = /mydata | ||

| + | |||

| + | [volume <font color='orange'>gotcloud</font>] | ||

| + | VOLUME_ID = vol-56071570 | ||

| + | MOUNT_PATH = /gotcloud | ||

| + | </code> | ||

| − | + | '''Create Your Cluster''' | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | <code> | |

| − | + | '''starcluster start -c <font color='red'>myexample</font> myseq-example''' | |

| − | + | StarCluster - (http://web.mit.edu/starcluster) (v. 0.93.3) | |

| − | + | Software Tools for Academics and Researchers (STAR) | |

| − | + | Please submit bug reports to starcluster@mit.edu | |

| − | + | ||

| − | + | >>> Validating cluster template settings... | |

| + | >>> Cluster template settings are valid | ||

| + | >>> Starting cluster... | ||

| + | [lines deleted] | ||

| + | >>> Mounting EBS volume vol-32273514 on /gotcloud... | ||

| + | >>> Mounting EBS volume vol-36788522 on /mydata... | ||

| + | [lines deleted] | ||

| + | </code> | ||

| − | + | When this completes, you are ready to run the GotCloud software on your data. | |

| − | + | Make sure you have defined and mounted volumes for your sequence data and the | |

| − | + | output steps of the aligner and umake. | |

| − | + | These volumes (as well as /gotcloud) should be available on each node. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | <code> | ||

| + | '''starcluster sshmaster myseq-example''' | ||

| + | StarCluster - (http://web.mit.edu/starcluster) (v. 0.93.3) | ||

| + | Software Tools for Academics and Researchers (STAR) | ||

| + | [lines deleted] | ||

| + | |||

| + | '''df -h''' | ||

| + | '''ssh node001 df -h''' | ||

</code> | </code> | ||

| + | |||

| + | If your data is visible on each node, you're ready to run the software as described | ||

| + | in [[GotCloud]]. | ||

Latest revision as of 10:49, 7 November 2014


If you have access to your own cluster, your task will be much simpler: install the GotCloud software (see GotCloud: Source Releases) and run it as described on those pages.

For those who are not so lucky as to have access to a cluster, Amazon Web Services (AWS) provides an alternative: you can run the GotCloud software on a cluster created in AWS. One tool that makes it easy to create a cluster from AMIs (Amazon Machine Images) is StarCluster (see http://star.mit.edu/cluster/).

The following shows an example of how you might use StarCluster to create an AWS cluster and set it up to run GotCloud. Setting up StarCluster involves many details. This page is not intended to explain all of the variations you might choose, but it should give you a working example.

Getting Started With StarCluster

StarCluster has extensive documentation (http://star.mit.edu/cluster/) that covers it in much more detail than this page.

To install and set up StarCluster for the first time, you can follow the QuickStart instructions: http://star.mit.edu/cluster/docs/latest/quickstart.html

- They include installation instructions.
  - See http://star.mit.edu/cluster/docs/latest/installation.html for more detailed StarCluster installation instructions if the QuickStart instructions are not enough (especially if you are running on Windows).
- They include setting up a basic StarCluster configuration file.
  - You will need your AWS credentials to set up the configuration file.
    - If you need help setting up your AWS credentials, see: AWS Credentials

You can skip actually starting the cluster in the QuickStart instructions if you want.

Troubleshooting: When I tried this, the starcluster start mycluster step failed with an error similar to:

- http://star.mit.edu/cluster/mlarchives/2425.html

I followed the suggestions there and at https://github.com/jtriley/StarCluster/issues/455:

$ sudo pip uninstall boto
$ sudo easy_install boto==2.32.0

- I had trouble with pip install, but found that easy_install worked.

I had to force-terminate mycluster after the failed start:

starcluster terminate -f mycluster

After that, I was able to start my cluster successfully.
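Before retrying a failed start, it can help to confirm which boto version Python actually picks up. The following is a minimal sketch and is not part of StarCluster. It assumes StarCluster runs under the python found on your PATH. The helper names are hypothetical, and 2.32.0 is simply the version that worked in the note above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): check which boto version Python sees.
# Assumes StarCluster runs under the "python" on your PATH.

WANTED_BOTO="2.32.0"   # the version that worked in the troubleshooting note

# Print the installed boto version, or "none" if boto cannot be imported.
installed_boto_version() {
    python -c 'import boto; print(boto.__version__)' 2>/dev/null || echo none
}

# Exit status 0 only if the given version string equals WANTED_BOTO.
boto_is_pinned() {
    [ "$1" = "$WANTED_BOTO" ]
}
```

For example, pass the output of installed_boto_version to boto_is_pinned. If it reports a mismatch, repeat the downgrade steps above before running starcluster start again.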

Don't forget to terminate your cluster:

starcluster terminate mycluster

StarCluster and GotCloud

StarCluster Config Settings

By default, StarCluster expects a configuration file at ~/.starcluster/config.

- StarCluster can create a template of this file for you.

Make sure your StarCluster configuration file is set up for your usage.

General AWS settings:

[aws info]
aws_access_key_id = #your aws access key id here
aws_secret_access_key = #your secret aws access key here
aws_user_id = #your 12-digit aws user id here

You should have set these in Getting Started With StarCluster above (QuickStart guide and AWS Credentials).

GotCloud cluster definition:

- You may want to create a new cluster section for running GotCloud in your configuration file, ~/.starcluster/config (or you can use smallcluster). You can call it anything you want, for example, gccluster.
- Example:

[cluster gccluster]
KEYNAME = mykey
CLUSTER_SIZE = 4
CLUSTER_USER = sgeadmin
CLUSTER_SHELL = bash
MASTER_IMAGE_ID = ami-6ae65e02
NODE_IMAGE_ID = ami-3393a45a
NODE_INSTANCE_TYPE = m3.large

  - Set KEYNAME to the key you want to use.
  - Set CLUSTER_SIZE to the number of nodes you want to start (this need not be 4).
  - Set CLUSTER_USER to add an additional user, such as sgeadmin.
  - Set CLUSTER_SHELL to the shell you want to use, such as bash.
  - Set MASTER_IMAGE_ID to the latest GotCloud AMI; see GotCloud: AMIs.
    - This AMI contains GotCloud, the reference, and the demo files in the /home/ubuntu/ directory, which is visible on all nodes in the cluster.
    - It has a 30G volume, but only 6G of it is available.
  - Set NODE_IMAGE_ID to a StarCluster ubuntu x86_64 AMI.
    - Each node does not need its own 30G volume containing GotCloud, the reference, and the demo files, so we use a separate image for the nodes.
    - The nodes can simply access the master's copy of GotCloud, the reference, and the Amazon demo.
  - Set NODE_INSTANCE_TYPE to the type of instance you want to start in your cluster.
    - See http://aws.amazon.com/ec2/pricing/ for instance descriptions and prices.
    - We do not recommend running GotCloud on machines with less than 4GB of memory.
- CLUSTER_SIZE * (CPUs per NODE_INSTANCE_TYPE instance) = the number of jobs you can run concurrently in GotCloud.

Data volumes:

- By default, the GotCloud AMI contains about 5G of extra space that you can use.
  - The /home/ubuntu/ directory is visible from all machines.
  - Use /home/ubuntu/ for the output directory if the output is smaller than 5G.
  - This directory will be deleted when you terminate the cluster.
- Create your own volumes and attach them to the GotCloud cluster.
  - Instructions TBD.
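Before starting the cluster, you can catch credentials under General AWS settings that were never filled in. The idea is to scan the [aws info] section for empty values or leftover "#..." placeholders. This is a hypothetical helper, sketched under the assumption that each setting sits on its own "name = value" line. Option names are compared case-insensitively because the examples on this page use both spellings.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): list [aws info] credentials that are missing,
# empty, or still hold a "#..." placeholder. Prints nothing when all are set.
# Assumes one "name = value" setting per line.

missing_aws_keys() {
    local config="${1:-$HOME/.starcluster/config}"
    awk '
        # A "[...]" line starts a new section; note whether it is [aws info].
        /^[ \t]*\[/ { in_aws = (tolower($0) ~ /^[ \t]*\[aws info\]/); next }
        # Record each setting whose value is non-empty and not a placeholder.
        in_aws && index($0, "=") > 0 {
            key = tolower($0)
            sub(/[ \t]*=.*/, "", key)
            sub(/^[ \t]+/, "", key)
            val = $0
            sub(/^[^=]*=[ \t]*/, "", val)
            if (val != "" && val !~ /^#/) ok[key] = 1
        }
        # Report the required settings that were never recorded.
        END {
            n = split("aws_access_key_id aws_secret_access_key aws_user_id", want, " ")
            for (i = 1; i <= n; i++) if (!(want[i] in ok)) print want[i]
        }
    ' "$config"
}
```

Running missing_aws_keys with no argument checks ~/.starcluster/config. Any name it prints still needs a real value.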

Starting the Cluster

- Start the cluster:

starcluster start -c gccluster mycluster

  - Alternatively, if you set the default template to gccluster in the [global] section at the start of the configuration file (DEFAULT_TEMPLATE=gccluster), you can simply run:

starcluster start mycluster

  - It will take a few minutes for the cluster to start.
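If you prefer the DEFAULT_TEMPLATE route, the edit can be scripted. This is a minimal sketch with a hypothetical helper name. It assumes your config already has an upper-case DEFAULT_TEMPLATE line in [global], as the example under Old Instructions does.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): set DEFAULT_TEMPLATE in a StarCluster config.
# Assumes an existing upper-case "DEFAULT_TEMPLATE = ..." line. Writes through a
# temp file instead of "sed -i", whose syntax differs between GNU and BSD sed.

set_default_template() {
    local template="$1"
    local config="${2:-$HOME/.starcluster/config}"
    local tmp status
    tmp=$(mktemp) || return 1
    # Rewrite the line, then copy back over the original so its permissions are kept.
    sed "s/^[[:space:]]*DEFAULT_TEMPLATE[[:space:]]*=.*/DEFAULT_TEMPLATE = $template/" \
        "$config" > "$tmp" && cat "$tmp" > "$config"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

After set_default_template gccluster, starcluster start mycluster should pick up the gccluster section.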

Copying Data to/from the Cluster

Copy data onto the cluster (run this command on your local machine):

starcluster put mycluster /path/to/local/file/or/dir /remote/path/

Pull data from the cluster onto your local machine (run this command on your local machine):

starcluster get mycluster /path/to/remote/file/or/dir /local/path/

Reminder: if you write your output to /home/ubuntu/, it will be deleted when you terminate the cluster.

Running GotCloud on StarCluster

- If you have not already done so, log on to the cluster as ubuntu:

starcluster sshmaster -u ubuntu mycluster

  - Type yes if the terminal asks whether you want to continue connecting.
- When running GotCloud:
  - Set the cluster/batch type either in the configuration or on the command line:
    - In the configuration:

BATCH_TYPE = sgei

    - On the command line:

--batchtype sgei

  - Set the number of jobs to run:

--numjobs #

    - Replace # with the number of concurrent jobs you want to run (probably CLUSTER_SIZE * CPUs in NODE_INSTANCE_TYPE).
  - Otherwise, run GotCloud as you normally would.
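The --numjobs rule of thumb above is simple arithmetic. This sketch (helper names hypothetical) multiplies CLUSTER_SIZE by the CPUs per node and prints the matching GotCloud command rather than running it. You look up the CPU count for your NODE_INSTANCE_TYPE yourself. With the gccluster example (4 nodes of m3.large, 2 vCPUs each), it reproduces the --numjobs 8 used in the demo.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): derive --numjobs as CLUSTER_SIZE * CPUs per node
# and print (not run) the GotCloud command. Nothing here queries AWS.

numjobs() {
    local cluster_size="$1" cpus_per_node="$2"
    echo $(( cluster_size * cpus_per_node ))
}

# Usage: gotcloud_cmd STEP CONF OUTDIR NUMJOBS
gotcloud_cmd() {
    local step="$1" conf="$2" outdir="$3" jobs="$4"
    echo "gotcloud $step --conf $conf --outdir $outdir --numjobs $jobs --batchtype sgei"
}

# 4 nodes x 2 vCPUs (m3.large) -> the snpcall command used in the demo
gotcloud_cmd snpcall example/test.conf output "$(numjobs 4 2)"
```

Printing first lets you check the line before pasting it on the master node.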

To log in to a specific non-master node, run:

starcluster sshnode -u ubuntu mycluster node001
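To run one command on every worker rather than one at a time, you can loop over the node names. After the master, StarCluster names the workers node001, node002, and so on, so a CLUSTER_SIZE of 4 gives node001 to node003. This is a sketch with hypothetical helper names. It assumes your StarCluster version accepts a trailing command for sshnode. Setting SC=echo makes it print the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): run one command on every non-master node.
# Assumes StarCluster naming (master, node001, node002, ...) and that sshnode
# accepts a trailing command. SC=echo prints the commands instead of running them.

SC="${SC:-starcluster}"

# Print node001 .. node(N-1) for a cluster of CLUSTER_SIZE N (the master is one of N).
node_names() {
    local size="$1" i=1
    while [ "$i" -lt "$size" ]; do
        printf 'node%03d\n' "$i"
        i=$((i + 1))
    done
}

# Usage: on_each_node CLUSTER CLUSTER_SIZE 'COMMAND'
# Pass the command as one quoted string so starcluster does not parse its options.
on_each_node() {
    local cluster="$1" size="$2" node
    shift 2
    for node in $(node_names "$size"); do
        "$SC" sshnode -u ubuntu "$cluster" "$node" "$@"
    done
}
```

For example, on_each_node mycluster 4 'df -h' checks disk space on node001 through node003.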

Monitoring Cluster Usage

- Monitor jobs in the queue

- View Sun Grid Engine Load

- View the average load per node using:
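When many jobs are queued, a count per state is easier to read than the full qstat listing. Here is a small awk sketch with a hypothetical helper name. It assumes the default qstat layout: two header lines, then one line per job with the state in the fifth column. It does not apply to qstat -f output.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): count SGE jobs per state from plain qstat output.
# Assumes qstat's default layout: two header lines, then one line per job with
# the state (qw, r, ...) in the fifth column. Not meant for "qstat -f".

job_state_counts() {
    awk 'NR > 2 && NF >= 5 { count[$5]++ }
         END { for (s in count) print s, count[s] }' | sort
}
```

Run it on the master as qstat | job_state_counts. Lines such as "qw 3" and "r 8" show how many jobs are waiting and running.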

Terminate the Cluster

- First, check whether you need to copy any data off the cluster, since it will be deleted upon termination (see Copying Data to/from the Cluster).
- Terminate the cluster:

starcluster terminate mycluster
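The two steps above can be combined so that termination only happens after a successful copy. This is a minimal sketch with a hypothetical helper name. It assumes the starcluster get CLUSTER REMOTE LOCAL form from the StarCluster documentation, and that starcluster exits with a non-zero status when the copy fails. Setting SC=echo turns it into a dry run.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): copy results off the cluster, then terminate it
# only if the copy succeeded. Assumes "starcluster get CLUSTER REMOTE LOCAL"
# and a non-zero exit status on failure. SC=echo gives a dry run.

SC="${SC:-starcluster}"

# Usage: save_then_terminate CLUSTER REMOTE_DIR LOCAL_DIR
save_then_terminate() {
    local cluster="$1" remote_dir="$2" local_dir="$3"
    mkdir -p "$local_dir" || return 1
    if "$SC" get "$cluster" "$remote_dir" "$local_dir"; then
        "$SC" terminate "$cluster"
    else
        echo "copy failed; leaving $cluster running" >&2
        return 1
    fi
}
```

For example: save_then_terminate mycluster /home/ubuntu/output ./results. Here /home/ubuntu/output stands for wherever your GotCloud --outdir points.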

Run GotCloud Demo Using StarCluster

- Create a new cluster section in your configuration file, ~/.starcluster/config:
  - Add the following to the end of the configuration file:

[cluster gccluster]
KEYNAME = mykey
CLUSTER_SIZE = 4
CLUSTER_USER = sgeadmin
CLUSTER_SHELL = bash
MASTER_IMAGE_ID = ami-6ae65e02
NODE_IMAGE_ID = ami-3393a45a
NODE_INSTANCE_TYPE = m3.large

- Start the cluster:

starcluster start -c gccluster mycluster

  - Alternatively, if you set the default template to gccluster in the [global] section at the start of the configuration file (DEFAULT_TEMPLATE=gccluster), you can simply run:

starcluster start mycluster

  - It will take a few minutes for the cluster to start.
- Log on to the cluster as ubuntu:

starcluster sshmaster -u ubuntu mycluster

  - Type yes if the terminal asks whether you want to continue connecting.
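The first step above, adding the cluster section, can also be done from the shell. This sketch uses a hypothetical helper name and appends the same gccluster section unless one already exists. mykey is still the placeholder key name. The AMI IDs are the ones from the example, so check GotCloud: AMIs for current ones.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): append the example [cluster gccluster] section
# to the StarCluster config unless a section with that name already exists.
# "mykey" and the AMI IDs are the example values from this page.

add_gccluster_section() {
    local config="${1:-$HOME/.starcluster/config}"
    if grep -q '^\[cluster gccluster\]' "$config" 2>/dev/null; then
        echo "[cluster gccluster] already present in $config" >&2
        return 0
    fi
    cat >> "$config" <<'EOF'

[cluster gccluster]
KEYNAME = mykey
CLUSTER_SIZE = 4
CLUSTER_USER = sgeadmin
CLUSTER_SHELL = bash
MASTER_IMAGE_ID = ami-6ae65e02
NODE_IMAGE_ID = ami-3393a45a
NODE_INSTANCE_TYPE = m3.large
EOF
}
```

Running it twice is harmless: the second call only prints a note.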

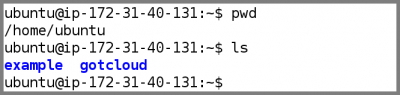

Examine the Setup

- After logging into the Amazon node as the ubuntu user, you should by default be in the ubuntu home directory, /home/ubuntu.
  - You can check this by running:

pwd

  - This should output:

/home/ubuntu

- Take a look at the contents of the ubuntu user's home directory:

ls

  - This should list two directories, example and gotcloud:
    - The example directory contains the files for this demo.
    - The gotcloud directory contains the GotCloud programs and pre-compiled source.

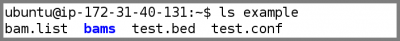

- Look at the example input files:

ls example

  - bam.list contains the list of BAM files per sample.
  - bams is a subdirectory containing the BAM files for this demo.
  - test.bed contains the region we want to process in this demo.
    - To make the demo run faster, we only process a small region of chromosome 22, the APOL1 region. This file tells GotCloud which region to process.

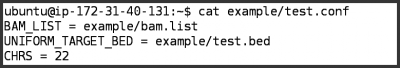

  - test.conf contains the settings we want GotCloud to use for this run.
    - For the demo, we tell GotCloud:
      - The list of BAMs to use:

BAM_LIST = example/bam.list

      - The region to process rather than the whole genome:

UNIFORM_TARGET_BED = example/test.bed

      - The chromosomes to process. The default chromosomes are 1-22 and X, but we only want to process chromosome 22:

CHRS = 22
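To confirm the three settings described above without opening the file, you can grep for them. This is a one-function sketch with a hypothetical name, meant to be run from /home/ubuntu on the master. test.conf may contain other settings, which it simply ignores.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the demo settings described above from
# the GotCloud configuration. Run from /home/ubuntu on the master; any other
# settings in the file are ignored.

show_demo_settings() {
    local conf="${1:-example/test.conf}"
    grep -E '^[[:space:]]*(BAM_LIST|UNIFORM_TARGET_BED|CHRS)[[:space:]]*=' "$conf"
}
```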

Run GotCloud SnpCall Demo

- Run GotCloud snpcall:

gotcloud snpcall --conf example/test.conf --outdir output --numjobs 8 --batchtype sgei

  - The ubuntu user is set up to have the gotcloud program and tools in its path, so you can just type the program name and it will be found.
  - There is enough space in /home/ubuntu for the demo output.
    - /home/ubuntu is visible from all nodes in the cluster.
  - This will take a few minutes to run.
  - GotCloud first generates a makefile and then runs it.
  - After a while, GotCloud snpcall will print some messages to the screen. This is expected.
- See Monitoring Cluster Usage if you want to monitor cluster usage as GotCloud runs.
- When complete, GotCloud snpcall will indicate success or failure.
  - To look at the snpcall results, see: GotCloud: Amazon Demo -> Examining SnpCall Output

Run GotCloud Indel Demo

- Run GotCloud indel:

gotcloud indel --conf example/test.conf --outdir output --numjobs 8 --batchtype sgei

  - The ubuntu user is set up to have the gotcloud program and tools in its path, so you can just type the program name and it will be found.
  - There is enough space in /home/ubuntu for the demo output.
    - /home/ubuntu is visible from all nodes in the cluster.
  - This will take a few minutes to run.
- See Monitoring Cluster Usage if you want to monitor cluster usage as GotCloud runs.
- When complete, GotCloud indel will indicate success or failure.
  - To look at the indel results, see: GotCloud: Amazon Demo -> Examining Indel Output
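If you want to run both demo steps unattended, a loop can stop at the first failure. This is a sketch with a hypothetical helper name. It assumes gotcloud exits with a non-zero status when a step fails; the page itself only says GotCloud reports success or failure. GC=echo gives a dry run.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): run the snpcall and indel demos back to back,
# stopping at the first failure. Assumes gotcloud exits non-zero when a step
# fails. Run from /home/ubuntu on the master; GC=echo gives a dry run.

GC="${GC:-gotcloud}"

# Usage: run_demo [NUMJOBS]   (defaults to 8, as in the commands above)
run_demo() {
    local jobs="${1:-8}" step
    for step in snpcall indel; do
        "$GC" "$step" --conf example/test.conf --outdir output \
            --numjobs "$jobs" --batchtype sgei || {
            echo "gotcloud $step failed" >&2
            return 1
        }
    done
}
```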

Terminate the Demo Cluster

- Exit from your master node:

exit

- Terminate the cluster:
  - Since this is just a demo, we don't have to worry about the data being deleted upon termination.

starcluster terminate mycluster

  - Answer y to the question Terminate EBS cluster mycluster (y/n)?

Old Instructions

StarCluster Configuration Example

StarCluster creates a template configuration file in ~/.starcluster/config, and you are instructed to edit it and set the correct values for the variables. Here is a highly simplified example of a config file that should work. There are many settings you might want to choose, so craft the config file with care. You'll need to specify nodes with about 4GB of memory (such as type m1.medium) and make sure each node has access to the input and output data for the step being run.

####################################
## StarCluster Configuration File ##
####################################
[global]
DEFAULT_TEMPLATE=myexample

#############################################
## AWS Credentials Settings
#############################################
[aws info]
AWS_ACCESS_KEY_ID = AKImyexample8FHJJF2Q
AWS_SECRET_ACCESS_KEY = fthis_was_my_example_secretMqkMIkJjFCIGf
AWS_USER_ID=199998888709

AWS_REGION_NAME = us-east-1 # Choose your own region
AWS_REGION_HOST = ec2.us-east-1.amazonaws.com
AWS_S3_HOST = s3-us-east-1.amazonaws.com

###########################
## EC2 Keypairs
###########################
[key east1_starcluster]
KEY_LOCATION = ~/.ssh/AWS/east1_starcluster_key.rsa # Same region

###########################################
## Define Cluster
## starcluster start -c myexample nameichose4cluster
###########################################
[cluster myexample] # Name of this cluster definition
KEYNAME = east1_starcluster # Name of keys I need
CLUSTER_SIZE = 4 # Number of nodes
CLUSTER_SHELL = bash

# Choose the base AMI using starcluster listpublic
# (http://star.mit.edu/cluster/docs/0.93.3/faq.html)
NODE_IMAGE_ID = ami-765b3e1f
AVAILABILITY_ZONE = us-east-1a # A zone within your region; must match your EBS volumes' zone
NODE_INSTANCE_TYPE = m1.medium # 4G memory is the minimum for GotCloud

VOLUMES = gotcloud, mydata
[volume mydata]
VOLUME_ID = vol-6e729657
MOUNT_PATH = /mydata

[volume gotcloud]
VOLUME_ID = vol-56071570
MOUNT_PATH = /gotcloud

Create Your Cluster

starcluster start -c myexample myseq-example
StarCluster - (http://web.mit.edu/starcluster) (v. 0.93.3)
Software Tools for Academics and Researchers (STAR)
Please submit bug reports to starcluster@mit.edu

>>> Validating cluster template settings...
>>> Cluster template settings are valid
>>> Starting cluster...
[lines deleted]
>>> Mounting EBS volume vol-32273514 on /gotcloud...
>>> Mounting EBS volume vol-36788522 on /mydata...
[lines deleted]

When this completes, you are ready to run the GotCloud software on your data. Make sure you have defined and mounted volumes for your sequence data and for the output of the aligner and umake steps. These volumes (as well as /gotcloud) should be available on each node. To check, log on to the master and look at the mounted file systems on the master and on a node:

starcluster sshmaster myseq-example
StarCluster - (http://web.mit.edu/starcluster) (v. 0.93.3)
Software Tools for Academics and Researchers (STAR)
[lines deleted]

df -h
ssh node001 df -h
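With several nodes, the check above can be repeated for every host. This sketch uses a hypothetical helper name and is meant to run on the master, as in the session above. It assumes:

- the hosts are named master, node001, node002, and so on;
- passwordless ssh between them works, as in the ssh node001 example;
- the mountpoint utility is installed on the nodes.

SSH=echo makes it print the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): from the master, check that each given path is
# a mount point on master and on node001 .. node(N-1). SSH=echo gives a dry run.

SSH="${SSH:-ssh}"

# Usage: check_mounts CLUSTER_SIZE MOUNT_POINT [MOUNT_POINT...]
check_mounts() {
    local size="$1" i=0 host mp status=0
    shift
    while [ "$i" -lt "$size" ]; do
        if [ "$i" -eq 0 ]; then host=master; else host=$(printf 'node%03d' "$i"); fi
        for mp in "$@"; do
            "$SSH" "$host" mountpoint -q "$mp" || {
                echo "$mp is not mounted on $host" >&2
                status=1
            }
        done
        i=$((i + 1))
    done
    return "$status"
}
```

For the example config above, run check_mounts 4 /gotcloud /mydata. It prints nothing and returns success when every volume is mounted everywhere.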

If your data is visible on each node, you're ready to run the software as described in GotCloud.